Let’s have a look at the humble pixel. The term pixel, short for “picture element,” is the smallest visible unit of a digital image. It is the building block of what is seen on screen and what is sent to the printer. These tiny squares, when combined, form an image on a screen or other digital medium. Pixels are arranged on a grid, and the number of pixels in that grid determines how much detail the image can contain. Each pixel represents a single point of light in an image and displays one specific color and brightness.

Pixel facts

Pixels appear in several contexts: in images, on monitors, and in camera and scanner sensors. A pixel’s color is usually determined by combining values of red, green, and blue light. When these three primaries are mixed in varying intensities, they can create a wide range of colors.

Resolution

Four types of resolution are essential for understanding how digital images work: image, screen, sensor, and printer resolution. Image resolution describes the pixels in digital photos or graphics. It is measured in pixels per inch (PPI), the number of pixels along one linear inch of the image. The more pixels an image contains, the more detail it can display or print. Images for print are usually prepared at 300 PPI to achieve a high level of detail and sharpness. For web and video, 72 PPI is the traditional choice; at the same physical dimensions, a 72 PPI image contains far fewer pixels than a 300 PPI one, so its file is much smaller (Fig. 1).
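To make the PPI arithmetic concrete, here is a minimal sketch that multiplies a print size in inches by the PPI to get the pixel dimensions. The 8 × 10-inch size and the function name `pixel_dimensions` are illustrative choices, not anything the article prescribes.

```python
def pixel_dimensions(width_in: float, height_in: float, ppi: int) -> tuple[int, int]:
    """Return the pixel width and height needed to fill a print size at a given PPI."""
    # Pixels along each edge = physical length in inches x pixels per linear inch.
    return round(width_in * ppi), round(height_in * ppi)


# Illustrative 8 x 10 inch image at the two resolutions discussed above.
for ppi in (300, 72):
    w, h = pixel_dimensions(8, 10, ppi)
    print(f"8 x 10 in at {ppi} PPI: {w} x {h} px = {w * h:,} pixels")
```

At 300 PPI this gives 2400 × 3000 px (7,200,000 pixels); at 72 PPI only 576 × 720 px (414,720 pixels), which is why the 72 PPI version is so much smaller.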

Screen resolution, on digital displays such as phones, TVs, and monitors, is the number of pixels the screen displays, usually given as width × height (for example, 1920 × 1080). If you look closely at a monitor with a magnifying glass, you will see a grid of tiny squares, each of which independently displays colored light. The size of a screen pixel depends on both the resolution and the physical size of the display: higher resolutions on the same screen size result in smaller pixels and sharper images. This pixel density is also measured in PPI and indicates how many pixels fit within one linear inch of the screen. Higher pixel densities produce finer detail.
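Pixel density can be estimated from a screen’s resolution and its diagonal size. The sketch below assumes square pixels and uses the diagonal, since that is how screen sizes are usually quoted; the 24-inch screen and the function name `pixel_density` are hypothetical examples.

```python
import math


def pixel_density(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Approximate pixels per inch of a display from its resolution and diagonal size."""
    # The diagonal length in pixels follows from the Pythagorean theorem.
    diagonal_px = math.hypot(width_px, height_px)
    # Dividing by the physical diagonal gives pixels per linear inch.
    return diagonal_px / diagonal_in


# Same 24-inch screen size, two different resolutions (illustrative values).
print(round(pixel_density(1920, 1080, 24), 1))  # 91.8
print(round(pixel_density(3840, 2160, 24), 1))  # 183.6
```

Doubling the resolution on the same screen size doubles the density, so each pixel becomes smaller and the image looks sharper.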

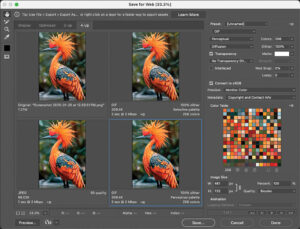

Sensor resolution is the number of light-sensitive elements on the sensor of a scanner or digital camera, which determines how much detail the device can capture. These elements are usually arranged in an array behind red, green, and blue filters so that the captured light is separated into red, green, and blue color data (Fig. 2).
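Sensor resolution is often quoted in megapixels, and it limits how large a print can be at a given PPI. This sketch treats each sensor element as one output pixel, which is a simplification; the 6000 × 4000 sensor and both function names are hypothetical.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Total pixels recorded by a sensor, in millions."""
    return width_px * height_px / 1_000_000


def max_print_size(width_px: int, height_px: int, ppi: int = 300) -> tuple[float, float]:
    """Largest print, in inches, the captured pixels can fill at the given PPI."""
    return width_px / ppi, height_px / ppi


# A hypothetical camera sensor that records 6000 x 4000 pixels.
print(megapixels(6000, 4000))  # 24.0
w, h = max_print_size(6000, 4000)
print(f"{w:.1f} x {h:.1f} in at 300 PPI")  # 20.0 x 13.3 in at 300 PPI
```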

Printer resolution, measured in dots per inch (DPI), indicates how many ink dots a printer can place within one linear inch. The more dots per inch, the finer the print.

Bit depth

Now that we’ve seen how pixels fit into the scheme of digital images, let’s dissect the pixel and see what’s inside. Bit depth is the number of bits used to represent the color or brightness information of a single pixel. It determines how many distinct colors or shades a pixel can display or capture, and therefore how much tonal detail the image can hold.

Digital images are stored as binary numerical data. In this system, only two digits are available: zero and one. Think of each binary digit, or bit, as a light switch that can be turned on or off: zero equals off, and one equals on. An image that contains 1 bit of information per pixel, called a bitmap image, can display only two colors: black (off) or white (on). Images like this are frequently used for line art, which contains only areas of pure black and white (Fig. 3).
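Because a bitmap pixel needs only one bit, eight pixels can share a single byte. The sketch below unpacks such a row, assuming the common layout where the most significant bit holds the leftmost pixel; the layout and the function name `unpack_bitmap_row` are assumptions for illustration, and it follows this article’s convention of 0 = black, 1 = white.

```python
def unpack_bitmap_row(data: bytes, width: int) -> list[int]:
    """Unpack a row of 1-bit pixels, stored eight per byte, most significant bit first."""
    pixels = []
    for x in range(width):
        byte = data[x // 8]
        # Shift the bit for pixel x down to the lowest position and mask it off.
        pixels.append((byte >> (7 - x % 8)) & 1)
    return pixels


row = unpack_bitmap_row(bytes([0b10110000]), 8)
print(row)  # [1, 0, 1, 1, 0, 0, 0, 0]
# Using this article's convention: 1 = on (white), 0 = off (black).
print(["white" if bit else "black" for bit in row])
```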

Grayscale

Grayscale images have a range of tonality from the very darkest (black) through the midtones (gray) to the brightest (white). These images contain 8 bits of information in each pixel. More bits, more shades! Each bit is either zero or one, on or off (remember our switches), so eight bits can form $2^8 = 256$ different combinations (eight factors of 2 multiplied together). That gives 256 possible shades of gray, enough to render shadows, midtones, and highlights with a wide, smooth range of tonality. On screen and in print, these images resemble black-and-white photos (Fig. 4).
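The relationship between bits and shades is simply $2^{\text{bits}}$. This small sketch computes it and maps a brightness between 0.0 (black) and 1.0 (white) onto the 0–255 range of an 8-bit grayscale pixel; the function names are hypothetical.

```python
def gray_levels(bits: int) -> int:
    """Number of distinct shades a single channel can store at the given bit depth."""
    return 2 ** bits


def to_gray8(brightness: float) -> int:
    """Map a brightness from 0.0 (black) to 1.0 (white) onto an 8-bit gray value, 0-255."""
    brightness = min(max(brightness, 0.0), 1.0)  # clamp to the valid range
    return round(brightness * (gray_levels(8) - 1))


print(gray_levels(1), gray_levels(8))               # 2 256
print(to_gray8(0.0), to_gray8(0.5), to_gray8(1.0))  # 0 128 255
```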

Indexed color

Indexed color (IC) is a method of representing images using a limited color table and an indexing system to save space and simplify processing. While IC is efficient for graphics with few colors, it is not suitable for complex, continuous-tone images such as photographs, where color accuracy and detail are essential.

The IC model is primarily used to reduce file size and simplify color representation by limiting the number of colors in an image. Instead of storing full color information (such as RGB values) for each pixel, IC stores in each pixel a small number that points to an entry in a predefined list of colors.

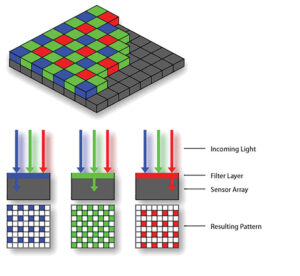

At the heart of IC is the color table, or palette (Fig. 5): the collection of specific colors used in the image. Each color in the palette is stored with its corresponding RGB (or other color model) values.

Instead of storing the full color value, each pixel contains an index that refers to a specific color in the table. For example, because indexes usually start at zero, a pixel value of three might point to the fourth color in the table, which could be a shade of red (e.g., RGB: 225, 35, 35).
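The lookup described above can be sketched in a few lines. The four-color palette and the tiny 2 × 3 image are made-up values; only the red at index 3 comes from the example in the text.

```python
# A hypothetical four-color palette; each entry is an (R, G, B) triple.
palette = [
    (0, 0, 0),        # index 0: black
    (255, 255, 255),  # index 1: white
    (30, 90, 200),    # index 2: blue
    (225, 35, 35),    # index 3: the shade of red from the example
]

# Each pixel stores only an index into the palette, not a full color.
indexed_pixels = [
    [3, 3, 1],
    [0, 2, 3],
]


def expand(pixels, palette):
    """Replace every palette index with the RGB color it refers to."""
    return [[palette[i] for i in row] for row in pixels]


rgb = expand(indexed_pixels, palette)
print(rgb[0][0])  # (225, 35, 35)
```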

The number of colors in the palette depends on the bit depth. Indexed color tops out at 8 bits, which supports up to 256 colors ($2^8 = 256$); 4-bit IC supports up to 16 colors ($2^4 = 16$).
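Working the other way, you can ask how many bits per pixel a palette of a given size needs. This sketch returns the smallest bit depth whose $2^{\text{bits}}$ index values cover every palette entry; the function name is hypothetical. Real file formats may only offer certain depths, so an odd result such as 5 bits would typically be stored at the next depth the format supports.

```python
def bits_for_palette(num_colors: int) -> int:
    """Smallest bit depth whose 2**bits possible index values cover num_colors entries."""
    # (n - 1).bit_length() is the number of bits needed to write the largest index, n - 1.
    return max(1, (num_colors - 1).bit_length())


for n in (2, 16, 17, 256):
    print(f"{n} colors: {bits_for_palette(n)} bit(s) per pixel")
```

This prints 1, 4, 5, and 8 bits respectively; note that one color past 16 already requires a fifth bit.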

Advantages

The main advantage of converting an image to IC is a smaller file. Since each pixel stores an index instead of full color information, IC images are more compact, saving storage space and bandwidth. IC is common in web graphics, where small files make it well suited to animations and simple graphics. The GIF format always uses a color table, and PNG offers an indexed (palette) mode (Fig. 6). IC is also used in 2D game apps, where file size and simplicity are critical, and for images with a limited color range, such as logos, icons, and line art.
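A rough estimate shows where the savings come from. This sketch compares uncompressed sizes of an 8-bit-per-channel RGB image and an indexed image of the same dimensions; it deliberately ignores compression, file headers, and row padding, and the 640 × 480 size and function names are illustrative.

```python
def rgb_bytes(width: int, height: int) -> int:
    """Uncompressed size of an 8-bit-per-channel RGB image: 3 bytes per pixel."""
    return width * height * 3


def indexed_bytes(width: int, height: int, bits_per_pixel: int = 8) -> int:
    """Uncompressed size of an indexed image: packed pixel indexes plus an RGB palette."""
    pixel_bytes = (width * height * bits_per_pixel + 7) // 8  # round up to whole bytes
    palette_bytes = (2 ** bits_per_pixel) * 3                  # one RGB triple per entry
    return pixel_bytes + palette_bytes


print(rgb_bytes(640, 480))         # 921600
print(indexed_bytes(640, 480))     # 307968
print(indexed_bytes(640, 480, 4))  # 153648
```

The 8-bit indexed version needs about a third of the RGB storage, and a 4-bit palette roughly halves it again.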

Limitations

IC images can only use colors present in the palette, limiting their ability to represent detailed, high-quality photos or images with smooth gradients. Editing IC images can be challenging since the color palette is fixed; adding new colors often requires redefining the palette. Color photographs, with their millions of subtle color variations, are poorly suited to IC because reducing them to a limited palette compromises visual fidelity.
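The palette limitation is easy to see in code: any color not in the table must be replaced by one that is. This sketch picks the nearest entry by straight-line (Euclidean) distance in RGB, which is an assumption made for simplicity; image editors generally use more sophisticated color matching and often add dithering.

```python
def nearest_palette_index(color, palette):
    """Index of the palette entry closest to color, by squared RGB distance."""
    return min(
        range(len(palette)),
        key=lambda i: sum((c - p) ** 2 for c, p in zip(color, palette[i])),
    )


palette = [(0, 0, 0), (255, 255, 255), (225, 35, 35)]
# An orange that is not in the palette gets snapped to the nearest entry, the red.
print(nearest_palette_index((240, 120, 40), palette))  # 2
```

The orange is lost: every pixel of it becomes the palette red, which is exactly the loss of fidelity described above.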

RGB

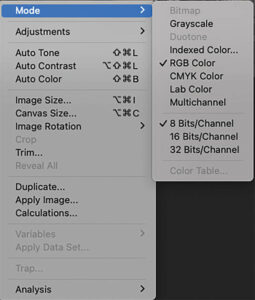

To explain how color images are produced, we need to introduce the concept of color channels. Every digital image contains at least one color channel. Bitmap, grayscale, and indexed color images have a single channel, but color images are composed of three or more channels. RGB, the most common color model, contains a red, a green, and a blue channel (Fig. 7). The channels separate the color information gathered by the sensors of a scanner or digital camera. In an 8-bit-per-channel RGB image, each channel is effectively a grayscale image that can hold 256 levels of light. When the three channels are superimposed, they can produce $256^3 = 16{,}777{,}216$ colors.
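One way to picture three 8-bit channels combining into a single color is to pack them into one 24-bit number, the same value that a hex color code such as `#E12323` writes out. The function name `pack_rgb` is hypothetical; the red value reuses the example from the indexed color section.

```python
def pack_rgb(r: int, g: int, b: int) -> int:
    """Combine three 8-bit channel values (0-255) into one 24-bit color number."""
    for value in (r, g, b):
        if not 0 <= value <= 255:
            raise ValueError("each channel must be in the range 0-255")
    # Red occupies the top 8 bits, green the middle 8, blue the bottom 8.
    return (r << 16) | (g << 8) | b


print(256 ** 3)                         # 16777216 possible colors
print(f"#{pack_rgb(225, 35, 35):06X}")  # #E12323
```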

HDR

High-bit-depth images, such as 16-bit color images, store 16 bits of information per channel for each pixel. That gives 65,536 levels per channel and $65{,}536^3 = 2^{48}$, roughly 281 trillion, possible colors. 32-bit images, used for high dynamic range (HDR) work, typically store floating-point values per channel and can represent an even wider range of tones and colors. More color information produces smoother color transitions and more detail in the shadows and highlights, at the expense of much larger file sizes.
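The trade-off between color range and file size can be tabulated directly. This sketch covers integer formats only (8 and 16 bits per channel); 32-bit HDR images typically use floating-point values, so they are not counted this way. The function names are hypothetical.

```python
def colors_per_pixel(bits_per_channel: int, channels: int = 3) -> int:
    """Distinct colors an integer image can encode with this many bits in each channel."""
    return (2 ** bits_per_channel) ** channels


def bytes_per_pixel(bits_per_channel: int, channels: int = 3) -> int:
    """Uncompressed storage per pixel, in bytes."""
    return bits_per_channel * channels // 8


for bits in (8, 16):
    print(f"{bits}-bit RGB: {colors_per_pixel(bits):,} colors, "
          f"{bytes_per_pixel(bits)} bytes per pixel")
```

Moving from 8 to 16 bits per channel multiplies the number of colors by more than 16 million but only doubles the uncompressed storage, from 3 to 6 bytes per pixel.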

Many photographers and graphics professionals claim that images scanned or captured in 16- or 32-bit color have superior color relationships when they are converted to 8-bit color for output.

The bottom line is that most monitors and printers cannot reproduce billions or trillions of colors, so 16- and 32-bit images usually need to be converted to 8 bits per channel in the image editing software before publishing (Fig. 8). This is where the photographers’ claim is put to the test, and where you should decide whether the extra effort is worth it. In general, most of your full-color images will be 8-bit RGB, but remember that higher bit depths lead to smoother gradients, better color accuracy, and more realistic rendering. Low bit depth can cause banding, where smooth transitions between colors or shades break into abrupt steps, and low resolution can cause pixelation, where edges and fine details look blocky or ragged.
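Two ideas from this paragraph can be sketched together: scaling a 16-bit channel value down to 8 bits, and counting how many distinct steps survive when a smooth gradient is stored at a given bit depth. Simple proportional scaling is one common approach, assumed here; editing software may also apply dithering. The function names are hypothetical.

```python
def to_8bit(value16: int) -> int:
    """Scale a 16-bit channel value (0-65535) to 8 bits (0-255)."""
    return round(value16 * 255 / 65535)


def distinct_levels(bits: int, samples: int = 1000) -> int:
    """Quantize a smooth 0-1 gradient to the given bit depth and count the steps that remain."""
    top = 2 ** bits - 1
    return len({round(i / (samples - 1) * top) for i in range(samples)})


print(to_8bit(0), to_8bit(32768), to_8bit(65535))  # 0 128 255
print(distinct_levels(8), distinct_levels(4))      # 256 16
```

At 4 bits, a thousand-sample gradient collapses into just 16 flat bands, which is the banding effect described above.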

Quality versus efficiency

In the digital graphics workflow, the quality of your image depends on how much information those tiny pixels contain. Resolution and bit depth together determine how faithfully your image will be reproduced on screen and in print. For efficiency’s sake, it may be necessary to assign smaller bit depths and resolutions to reduce file size and shorten download times when publishing images to video or the web. For print, however, maintaining the ideal resolution and millions (or more) of colors will help ensure the quality of your output.
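To put numbers on this trade-off, here is a rough uncompressed-size estimate for one illustrative 8 × 10-inch RGB image prepared three ways. Real files are usually compressed, so actual sizes will be smaller; the function name and the three scenarios are assumptions for illustration.

```python
def image_bytes(width_px: int, height_px: int, channels: int = 3, bits_per_channel: int = 8) -> int:
    """Uncompressed image size in bytes: pixels x channels x bits per channel / 8."""
    return width_px * height_px * channels * bits_per_channel // 8


# Illustrative 8 x 10 inch RGB image prepared three ways.
master = image_bytes(8 * 300, 10 * 300, bits_per_channel=16)  # 300 PPI, 16-bit editing master
print_ready = image_bytes(8 * 300, 10 * 300)                  # 300 PPI, 8-bit for print
web = image_bytes(8 * 72, 10 * 72)                            # 72 PPI, 8-bit for the web
for label, size in (("master", master), ("print", print_ready), ("web", web)):
    print(f"{label}: {size / 1_000_000:.1f} MB")
```

This prints 43.2 MB, 21.6 MB, and 1.2 MB: halving the bit depth halves the size, while dropping from 300 to 72 PPI cuts it by a factor of about 17.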